Probability is a well-established branch of mathematics of great importance. In mathematics, probabilities are calculated consistently with a set of axioms. Statisticians sometimes define uncertainty according to the rules of probability.

For example, suppose Bob plans to dine with Alice in the evening, and there is a 1/10 chance that he will not be available. Since the total probability is 1 (Kolmogorov's second axiom), there is a 9/10 chance that they will dine together and a 1/10 chance that they will not. If there is a 1/2 chance that Bob will not be available, the total probability is still 1, but it now comprises a probability of 1/2 for a joint dinner. In general, if the probability that Bob will not be available is p, the probability of the joint dinner is 1 - p.

In this example, some statisticians might say that the uncertainty of a joint dinner is 10% in the first case and 50% in the second. This is not correct.

Uncertainty is defined by Shannon's entropy, and for the joint dinner its expression is

$$S = -p\ln p - (1-p)\ln(1-p).$$

Engineers usually use the base-2 logarithm, in which case the uncertainty is expressed in bits. If p = 1/2, the uncertainty is 1 bit (one or zero). If p = 1/10, the uncertainty is about 0.47 bit, a little less than half a bit. The entropy is a physical quantity that is a function of the mathematical quantity p. But unlike mathematical quantities, which exist in a formal space defined by their axioms, entropy is bound by a physical law, the second law of thermodynamics: entropy tends to increase to its maximum.
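The two numerical values quoted above can be checked with a few lines of Python. This is a minimal sketch: the function name `binary_entropy` is an illustrative choice, and it uses the usual convention 0 · log 0 = 0 so that p = 0 or p = 1 gives zero uncertainty.

```python
import math


def binary_entropy(p: float, base: float = 2.0) -> float:
    """Entropy of a two-outcome event with probabilities p and 1 - p.

    Illustrative helper (not from the original text). Terms with zero
    probability are skipped, i.e. 0 * log(0) is taken as 0.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    total = 0.0
    for q in (p, 1.0 - p):
        if q > 0.0:
            total -= q * math.log(q, base)
    return total


# Bob is unavailable with probability p; the joint dinner has probability 1 - p.
for p in (0.5, 0.1):
    print(f"p = {p}: {binary_entropy(p):.2f} bit")
```

Running it prints 1.00 bit for p = 0.5 and 0.47 bit for p = 0.1. Passing `base=math.e` gives the same quantity in nats.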

The maximum value of the entropy S in our example is ln 2 (1 bit), reached at p = 1/2. Does this mean that nature prefers the chance of Bob not being available for dinner with Alice to be 1/2, where the entropy is at its maximum? The answer, surprising to a mathematician, is yes! If we examine many events of this kind, we will see a bell-like distribution that peaks at p = 1/2.
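Setting the derivative dS/dp = ln((1 - p)/p) to zero gives p = 1/2, where S = ln 2. The sketch below confirms this numerically by scanning a grid of p values. The helper name `entropy_nats` and the grid spacing of 0.001 are arbitrary choices. Note that it only checks the mathematical maximum of S; by itself it says nothing about how often such events occur in nature.

```python
import math


def entropy_nats(p: float) -> float:
    """S(p) = -p ln p - (1 - p) ln(1 - p), with 0 ln 0 taken as 0.

    Illustrative helper name, not from the original text.
    """
    return -sum(q * math.log(q) for q in (p, 1.0 - p) if q > 0.0)


# Evaluate S on a uniform grid over [0, 1] and keep the p with the largest S.
grid = [i / 1000 for i in range(1001)]
p_best = max(grid, key=entropy_nats)

print(f"argmax p = {p_best}, S = {entropy_nats(p_best):.6f}, ln 2 = {math.log(2):.6f}")
```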

Similarly, averaging many polls in which one randomly picks 1 of 3 choices yields a distribution of 50%:29%:21%, not the 33%:33%:33% expected from simple probability calculations. Laws of nature (the second law) can tell us something about the probabilities of probabilities. The function that describes the most probable distribution of the various events is called the distribution function.
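The text does not state which formula yields 50%:29%:21%. One form that reproduces these numbers, assumed here rather than taken from the source, is the Benford-like P(n) = log(1 + 1/n) / log(N + 1) for ranks n = 1, …, N. With N = 3 it gives log₄2, log₄(3/2) and log₄(4/3). The helper `log_preference` is hypothetical.

```python
import math


def log_preference(n_choices: int) -> list[float]:
    """Hypothetical helper: P(n) = log(1 + 1/n) / log(N + 1) for n = 1..N.

    This formula is an assumption chosen because it reproduces the quoted
    50:29:21 split for N = 3. The terms telescope: the sum of
    log((n + 1) / n) over n = 1..N equals log(N + 1), so the
    probabilities add up to 1.
    """
    norm = math.log(n_choices + 1)
    return [math.log(1 + 1 / n) / norm for n in range(1, n_choices + 1)]


shares = log_preference(3)
print([round(100 * s) for s in shares])  # [50, 29, 21]
```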

The distribution functions in nature that result from the tendency of entropy to maximize include, among others:

- Bell-like distributions for humans: mortality, heights, IQ, etc.

- Long-tail distributions for humans: Zipf's law in networks and texts, Benford's law in numbers, the Pareto law for wealth, etc.
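Benford's law, listed above, has a simple closed form: the leading digit d (1 to 9) of many naturally occurring numbers appears with probability log₁₀(1 + 1/d). A minimal sketch that tabulates it:

```python
import math

# Benford's law: leading digit d appears with probability log10(1 + 1/d), d = 1..9.
benford = {d: math.log10(1 + 1 / d) for d in range(1, 10)}

for d, prob in benford.items():
    print(f"{d}: {prob:.3f}")

# The terms telescope to log10(10) = 1, so this is a proper distribution.
print(f"total: {sum(benford.values()):.3f}")
```

Digit 1 leads about 30% of the time and digit 9 less than 5%, a long-tailed rather than uniform distribution.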

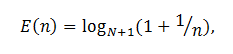

… is the income rank and E(…) is the relative income of the rank to the wealth distribution in the OECD countries, we obtain a remarkable agreement.